Page 275 - Contributed Paper Session (CPS) - Volume 4

CPS2222 Abdullah M.R. et al.

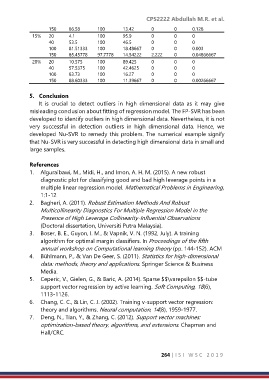

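| | | | | | | | |
|---|---|---|---|---|---|---|---|
| | 150 | 86.58 | 100 | 13.42 | 0 | 0 | 0.128 |
| 15% | 20 | 4.1 | 100 | 95.9 | 0 | 0 | 0 |
| | 40 | 53.5 | 100 | 46.5 | 0 | 0 | 0 |
| | 100 | 81.51333 | 100 | 18.48667 | 0 | 0 | 0.003 |
| | 150 | 85.45778 | 97.7778 | 14.54222 | 2.222 | 0 | 0.04866667 |
| 20% | 20 | 10.575 | 100 | 89.425 | 0 | 0 | 0 |
| | 40 | 57.5375 | 100 | 42.4625 | 0 | 0 | 0 |
| | 100 | 83.73 | 100 | 16.27 | 0 | 0 | 0 |
| | 150 | 88.60333 | 100 | 11.39667 | 0 | 0 | 0.00266667 |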

5. Conclusion

It is crucial to detect outliers in high-dimensional data, as they may lead to misleading conclusions about the fit of a regression model. The FP-SVR was developed to identify outliers in high-dimensional data. Nevertheless, it is not very successful in detecting them. Hence, we developed the Nu-SVR to remedy this problem. The numerical examples indicate that the Nu-SVR is very successful in detecting outliers in high-dimensional data in both small and large samples.

References

1. Alguraibawi, M., Midi, H., & Imon, A. H. M. (2015). A new robust diagnostic plot for classifying good and bad high leverage points in a multiple linear regression model. Mathematical Problems in Engineering, 1:1-12.

2. Bagheri, A. (2011). Robust estimation methods and robust multicollinearity diagnostics for multiple regression model in the presence of high leverage collinearity-influential observations (Doctoral dissertation, Universiti Putra Malaysia).

3. Boser, B. E., Guyon, I. M., & Vapnik, V. N. (1992, July). A training algorithm for optimal margin classifiers. In Proceedings of the Fifth Annual Workshop on Computational Learning Theory (pp. 144-152). ACM.

4. Bühlmann, P., & Van De Geer, S. (2011). Statistics for high-dimensional data: methods, theory and applications. Springer Science & Business Media.

5. Ceperic, V., Gielen, G., & Baric, A. (2014). Sparse ε-tube support vector regression by active learning. Soft Computing, 18(6), 1113-1126.

6. Chang, C. C., & Lin, C. J. (2002). Training ν-support vector regression: theory and algorithms. Neural Computation, 14(8), 1959-1977.

7. Deng, N., Tian, Y., & Zhang, C. (2012). Support vector machines: optimization-based theory, algorithms, and extensions. Chapman and Hall/CRC.

264 | ISI WSC 2019