Page 305 - Contributed Paper Session (CPS) - Volume 7


CPS2105 Hermansah et al.

Figure 2: The plot of the actual data and forecasting results of ARIMA models

b. Modeling with Neural Network

The architecture of the Back-propagation Neural Network (BPNN) was determined by trial and error over several candidate architectures. The activation function is the bipolar sigmoid from the input layer to the hidden layer and the linear function from the hidden layer to the output layer. Resilient back-propagation was chosen as the BPNN training algorithm, and its parameters were left at the default values of that algorithm. The best model is the one with the smallest MSE and MAPE values.
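A minimal sketch of the network described above, assuming Python with NumPy: one hidden layer with the bipolar sigmoid 2/(1 + e^(-x)) - 1 (equivalent to tanh(x/2)), a single linear output node, and full-batch training on the MSE with iRprop-, one common variant of resilient back-propagation. The function names, the weight initialisation and the Rprop constants (increase factor 1.2, decrease factor 0.5, initial step 0.1, step bounds 1e-6 and 50) are hypothetical choices often quoted for Rprop, not the settings used in the paper, which are not stated.

```python
import numpy as np


def bipolar_sigmoid(x):
    # f(x) = 2 / (1 + e^{-x}) - 1, range (-1, 1); identical to tanh(x / 2)
    return np.tanh(x / 2.0)


def forward(params, X):
    W1, b1, W2, b2 = params
    h = bipolar_sigmoid(X @ W1 + b1)  # input -> hidden: bipolar sigmoid
    y = h @ W2 + b2                   # hidden -> output: linear
    return h, y


def gradients(params, X, t):
    """Back-propagated gradients of MSE = mean((y - t)^2) w.r.t. every parameter."""
    W1, b1, W2, b2 = params
    h, y = forward(params, X)
    dy = 2.0 * (y - t) / X.shape[0]
    dW2 = h.T @ dy
    db2 = dy.sum(axis=0)
    # derivative of tanh(x / 2) is 0.5 * (1 - h^2)
    dh = (dy @ W2.T) * 0.5 * (1.0 - h ** 2)
    dW1 = X.T @ dh
    db1 = dh.sum(axis=0)
    return [dW1, db1, dW2, db2]


def train_rprop(X, t, n_hidden=7, epochs=500, eta_plus=1.2, eta_minus=0.5,
                step_init=0.1, step_min=1e-6, step_max=50.0, seed=0):
    """Train an n_in-n_hidden-1 network with iRprop- (hypothetical default constants)."""
    rng = np.random.default_rng(seed)
    n_in = X.shape[1]
    params = [rng.normal(0.0, 0.5, (n_in, n_hidden)), np.zeros(n_hidden),
              rng.normal(0.0, 0.5, (n_hidden, 1)), np.zeros(1)]
    steps = [np.full_like(p, step_init) for p in params]
    prev = [np.zeros_like(p) for p in params]
    for _ in range(epochs):
        grads = gradients(params, X, t)
        for i, (p, g) in enumerate(zip(params, grads)):
            same_sign = g * prev[i]
            # grow the per-weight step if the gradient kept its sign, shrink it if the sign flipped
            steps[i] = np.where(same_sign > 0, np.minimum(steps[i] * eta_plus, step_max),
                                np.where(same_sign < 0, np.maximum(steps[i] * eta_minus, step_min),
                                         steps[i]))
            # iRprop-: after a sign flip, skip this update and forget the gradient
            g = np.where(same_sign < 0, 0.0, g)
            p -= np.sign(g) * steps[i]  # only the sign of the gradient is used
            prev[i] = g
    _, y = forward(params, X)
    return params, float(np.mean((y - t) ** 2))
```

Usage: `params, train_mse = train_rprop(X_train, y_train)` with `X_train` of shape (n, 4) and `y_train` of shape (n, 1) gives a 4-7-1 network. Rprop adapts a separate step size for each weight from the sign of successive gradients and ignores the gradient magnitude, which is why it usually needs no learning-rate tuning.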

With 225 training data and 12 testing data, trial and error gave a best BPNN architecture of 4 input nodes, 7 hidden nodes and 1 output node (4-7-1), with an MSE of 1.2639 and a MAPE of 0.0046 (0.46%) in training. This model was then used to forecast the testing data, giving an MSE of 2.3735 and a MAPE of 0.0075 (0.75%). Because the MAPE is below 10%, it can be concluded that the model performs well. The forecasting results and the actual data are plotted as follows:

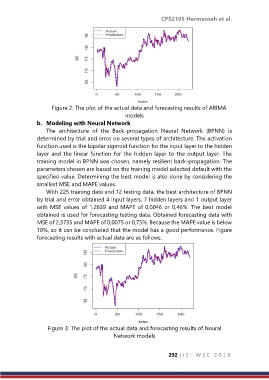

Figure 3: The plot of the actual data and forecasting results of Neural Network models
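The data preparation and evaluation steps can be sketched in plain Python, assuming that the 4 input nodes receive the 4 previous observations of the series and that the last 12 patterns form the testing set; the paper does not state how the inputs were built. MSE is the mean squared error, and MAPE is the mean of |(actual - forecast) / actual|, returned as a fraction so that 0.0075 corresponds to 0.75%. The function names are hypothetical.

```python
def make_lagged(series, n_lags=4):
    """Build input/target pairs: inputs are the n_lags previous values, target is the next one."""
    X, y = [], []
    for t in range(n_lags, len(series)):
        X.append(list(series[t - n_lags:t]))
        y.append(series[t])
    return X, y


def split_train_test(X, y, n_test=12):
    """Hold out the last n_test patterns for testing (an assumption, not stated in the paper)."""
    return X[:-n_test], y[:-n_test], X[-n_test:], y[-n_test:]


def mse(actual, forecast):
    """Mean squared error."""
    return sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual)


def mape(actual, forecast):
    """Mean absolute percentage error as a fraction (x100 for %); assumes no zero actual values."""
    return sum(abs((a - f) / a) for a, f in zip(actual, forecast)) / len(actual)


if __name__ == "__main__":
    actual = [100.0, 200.0]
    forecast = [101.0, 198.0]
    print(mse(actual, forecast))          # 2.5
    print(mape(actual, forecast) < 0.10)  # True: below the 10% threshold used in the text
```

Because MAPE divides by the actual values, it is scale-free, which is what allows a fixed threshold such as 10% to be applied; MSE stays in squared units of the series.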

292 | ISI WSC 2019